This quick HOWTO is a PowerShell script I wrote to monitor concurrent connections to a server. In this case we have a domain controller that is not behaving properly and I suspect it may be due to some kind of port exhaustion. The script is very quick and dirty but since it works I figured I’d share it. Note that the heavy lifting is done by TCPVCON.EXE from Sysinternals (http://technet.microsoft.com/en-ca/sysinternals/bb897437.aspx). I also include the current CPU utilization so I can check whether periods of high CPU coincide with an unusually high connection count.
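For anyone who wants to try the idea without TCPVCON.EXE, here is a minimal sketch that swaps in the built-in Get-NetTCPConnection cmdlet (Windows 8 / Server 2012 and later) together with Get-Counter. It is not the script from this post. The helper name New-ConnectionSample, the output shape and the five-second interval are all assumptions made for illustration.

```powershell
# Sketch only: summarizes a set of TCP connections alongside a CPU reading.
# New-ConnectionSample is a hypothetical helper name, not part of any module.
function New-ConnectionSample {
    param(
        [object[]]$Connections = @(),   # objects exposing a State property
        [double]$CpuPercent             # CPU utilization, 0-100
    )
    # Count only the connections that are currently established
    $established = @($Connections | Where-Object { "$($_.State)" -eq 'Established' })
    [pscustomobject]@{
        Time        = Get-Date
        Total       = $Connections.Count
        Established = $established.Count
        CpuPercent  = [math]::Round($CpuPercent, 1)
    }
}

# Poll every 5 seconds on a Windows machine (the interval is an arbitrary choice):
# while ($true) {
#     $cpu = (Get-Counter '\Processor(_Total)\% Processor Time').CounterSamples[0].CookedValue
#     New-ConnectionSample -Connections (Get-NetTCPConnection) -CpuPercent $cpu
#     Start-Sleep -Seconds 5
# }
```

Piping the samples to Export-Csv gives you something you can chart later to see whether connection counts and CPU rise together.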

Oct 20 2014

HOWTO: Monitor Concurrent Network Connections with PowerShell

Oct 20 2014

HOWTO: Analyze Very Large Text Files with PowerShell and Python

There are countless situations where an IT professional needs to parse a log file. In most instances, Notepad, Notepad2 or Notepad++ is enough to get in, find the information required and get out. But what if your log files are large? As in 20GB a day large? None of the typical editing tools will help, as the files are simply too large to open. You can switch to a more specialized text viewer such as LogExpert, but what if you need to actually manipulate the data in these log files to perform some kind of analysis? In other words, what if you have to review every single line in that 20GB-a-day file and compare it to some other line? And before you can even do that, you have to reformat the data, as the original source includes a bunch of cruft and formatting that you simply don’t want. This is where things get interesting, and this is what this blog post will help you solve.

In this specific scenario, I have a Windows DNS server that is very heavily used by tens of thousands of endpoints. The request from management was to identify the two-hour block of time over the course of a week during which the DNS servers are least utilized. In addition, they wanted to know the top 20 most requested DNS records during a given 24-hour period. At first blush, I thought this would be fairly simple:

- Enable DNS Debug Logging within the DNS Management Console

- Capture 24 hours worth of data

- Parse the resulting file to extract the date stamps and queries for that time period

- Group the results such that we can find the total number of queries as well as the most popular queries

I initially tried this approach and while it would have worked with smaller files, it failed miserably in this case due to the size of the data involved. This DNS server was generating log files in the neighborhood of 240MB per minute. My initial parsing code to extract the query names took about 90 minutes to run on each file. As a result, a large number of queries were missed. I eventually realized that if I was going to solve this problem, I was going to have to get clever and optimize.
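One common way to keep parse times down on files this size is to stream the log line by line instead of loading it into memory. The sketch below does that with a .NET StreamReader and tallies the queried names. It is not necessarily the optimization this post arrives at. It assumes each query line contains the word PACKET and ends with the name in length-prefixed form, such as (3)www(7)example(3)com(0), so check that against your own log. Get-DnsQueryCount is a hypothetical function name.

```powershell
# Sketch only: stream a DNS debug log and tally the queried names.
# Assumed layout: packet lines end with a name written as (3)www(7)example(3)com(0).
function Get-DnsQueryCount {
    param([Parameter(Mandatory)][string]$Path)

    # .NET does not follow PowerShell's current location, so resolve the path first
    $fullPath = (Resolve-Path -LiteralPath $Path).ProviderPath
    $counts = @{}
    $reader = New-Object System.IO.StreamReader($fullPath)
    try {
        while ($null -ne ($line = $reader.ReadLine())) {
            # Only packet lines that end in a length-prefixed name are of interest
            if ($line -match ' PACKET .*\s(\(\d+\)\S*)\s*$') {
                # Turn (3)www(7)example(3)com(0) into www.example.com
                $name = ($Matches[1] -replace '\(\d+\)', '.').Trim('.').ToLower()
                if ($name) { $counts[$name] = 1 + [int]$counts[$name] }
            }
        }
    }
    finally {
        $reader.Dispose()
    }
    # Most requested names first
    $counts.GetEnumerator() | Sort-Object Value -Descending |
        Select-Object @{n='Name';e={$_.Key}}, @{n='Count';e={$_.Value}}
}
```

With a log at a hypothetical path, `Get-DnsQueryCount -Path C:\temp\dns.log | Select-Object -First 20` would list the 20 most requested names. Only one line is held in memory at a time, so memory use grows with the number of distinct names rather than with the size of the file.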

Oct 13 2014

HOWTO: Windows 10 Technical Preview Feedback

Microsoft has released a technical preview of its upcoming consumer desktop operating system, Windows 10. Anyone can download it (amazingly, you don’t even need to sign in or sign up for anything to grab it!) from here: http://windows.microsoft.com/en-us/windows/preview-iso

I’ve been playing with it for a few minutes now and already I’ve noticed something fundamentally different compared to the Windows 8 preview. Back then, when I first loaded it up, I immediately found myself cursing at the new start menu. Unfortunately, other than this blog and various forums, I had no venue to voice my frustrations and certainly no venue where my complaints wouldn’t disappear into the abyss. Fast forward to this Windows 10 technical preview and I was shocked by a dialog box that appeared when I tried to open the control panel. It asked me: "Do you prefer to use the control panel or the PC Settings panel by default?" This wasn’t an OS configuration setting though. No, since this is a preview, this was a feedback prompt. I was being asked not only what I wanted, but given an opportunity to say why!

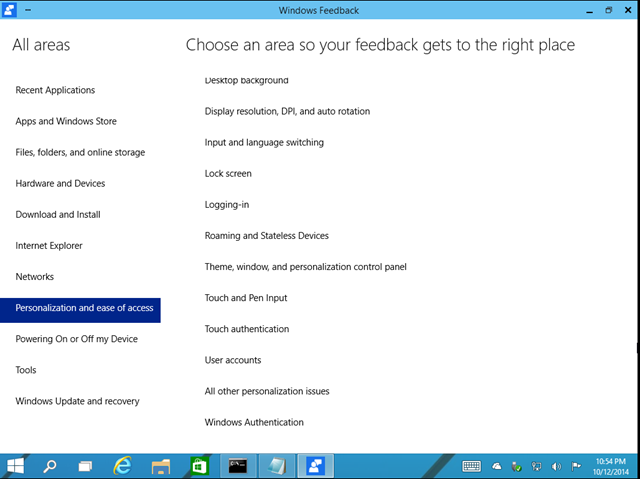

I then started exploring this a little further. I noticed that there is a search icon on the start menu that annoys me and that I wanted to remove. Surprisingly (or perhaps not), I discovered there was no obvious means of removing it. That’s when I ran the "Feedback" app right from within Windows 10. After registering my (test) Windows Live account, I found this:

Oct 09 2014

HOWTO: Install a 2 tier Windows 2012 R2 AD Integrated PKI Infrastructure

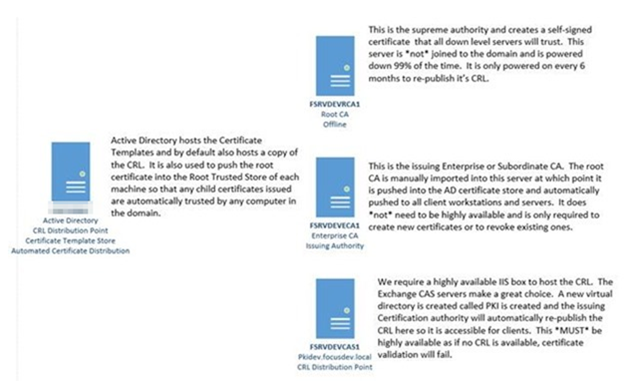

Earlier this year I was fortunate enough to spend the day with Mike MacGillivray, a Professional Field Engineer from Microsoft who specializes in Microsoft Public Key Infrastructure, or PKI. During that meeting we ended up building a brand new Microsoft PKI platform in our development environment. I took a ton of screenshots and documented the process as best I could. Since I had this content anyway, I have opted to share what I have. Note that I have obfuscated any details that would be deemed company specific. Fortunately, since this was built in our development environment, there isn’t a lot of that. Without further ado, I present a guide to building a Windows 2012 R2 Public Key Infrastructure.

Through this you will build and configure:

- A Windows 2012 R2 PKI Root Server

- A Windows 2012 R2 PKI Issuing/Enterprise Server

- Certificate Revocation Lists (CRLs) published through IIS configured through (in our case) an Exchange Client Access Server (CAS)

- Certificate Web Enrollment

A high-level breakdown of the environment is shown below:

The remainder of this post describes the specific steps necessary to create and configure a PKI environment. Before we begin you will need:

- A Windows 2012 R2 server that is not joined to the domain and will host the offline root certificate. This must be protected at all costs (FSRVDEVRCA1)

- A Windows 2012 R2 server that is joined to the domain. This is the enterprise/subordinate/issuing CA and will be used to issue and revoke new client certificates (FSRVDEVECA1)

- A highly available IIS web server to host the CRL. We have chosen to use our Exchange Client Access (CAS) servers for this purpose (FSRVDEVCAS1)

Sep 19 2014

HOWTO: Verify free space on C: for all servers with PowerShell

We will be applying Windows updates to all of our production servers shortly and I wanted to manually verify that we had at least 2GB free on all of our C: drives in preparation for the update. We have automated monitoring systems but I wanted to grab the information directly to ensure it was accurate. I exported a list of all of our servers into a text file and ran the following PowerShell to produce a list of the free space on every C: drive. I then copied and pasted this into Excel. Quick and dirty but it works great.

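# hostlist.txt needs a header line reading ServerList, followed by one server name per line

$computers = Import-Csv c:\temp\hostlist.txt

$computers | % {

# Keep the server name in its own variable so it can be printed next to the result

$Server = $_.ServerList

$Results = Get-WmiObject Win32_LogicalDisk -ComputerName $Server -Filter "DeviceID='C:'" -ErrorAction SilentlyContinue |

Select-Object Size,@{Name="FreeSize";Expression={"{0:N1}" -f ($_.FreeSpace/1gb) } }

# $Results is empty when the server could not be queried

if ($Results) { Write-Host $Server $Results.FreeSize }

}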

Sep 18 2014

HOWTO: Parse HTML using PowerShell

Unimportant Backstory

Today I was unfortunate enough to discover that one of the drives in my FreeNAS box had failed. I replaced the drive and wanted to watch the progress of the rebuild. If you log into the FreeNAS web management console there is a section that shows you the number of sectors synchronized and the percent complete. But that’s only useful if you stare at it. I want to know if the rebuild has locked up, which means grabbing this value periodically and sending an email alert if it doesn’t change after a certain period.

But before I can do any of that, I need to start with the basics and figure out how to pull the actual HTML from the website so I can parse it and do interesting things like that from there.

Important Part

The code below has the following capabilities:

- Is able to programmatically authenticate against any PHP-based (and possibly other) authentication mechanism

- Connects to a specific URL and pulls down all of the raw HTML for that page into a variable for further manipulation

This is certainly a handy snippet to keep in your back pocket!

# This is the URL that when visited with a web browser contains the username and password fields to fill in

$LoginURL = "http://yourwebsite.com/login.php"

# This is the URL of the page you actually want to pull content from but if accessed directly will normally just redirect you to the login page above

$ContentURL = "http://yourwebsite.com/someothercontentthatfirstrequiresauthentication.php"

# The username and password used to authenticate with the site above

$Username = "hero"

$Password = "superman"

# Create a new object that pulls the HTML data from the login page including the username and password fields

$website = Invoke-WebRequest -Uri $LoginURL

# Note the "username" and "password" attributes specified here may have a different name.

# Verify by checking the contents of $website.Forms[0].Fields

$website.Forms[0].Fields.username = $Username

$website.Forms[0].Fields.password = $Password

# Connect to the login URL and send the login credentials you created as POST and save the resulting session

# (the form filled in above lives in $website, so that is what gets posted)

Invoke-WebRequest "$LoginURL" -SessionVariable WebSession -Body $website.Forms[0] -Method Post | Out-Null

# Now that we're authenticated, connect to the actual URL you want and pass in the session object you created above

$data = Invoke-WebRequest -Uri $ContentURL -WebSession $WebSession

# There is a ton of other metadata that is returned that you most likely don't care about.

# If you just want the raw HTML to pull some specified content, try using the "outerhtml" property as shown below

$HTMLOutput = $data | select -ExpandProperty Parsedhtml | select -ExpandProperty IHTMLDocument3_documentElement | select -ExpandProperty outerhtml

# Display the results to the screen. This will be the raw HTML returned by the site. You can now do whatever you'd like with it.

$HTMLOutput
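Once the raw HTML is in a variable, pulling out a single value such as the rebuild percentage can be done with a regular expression. This is a minimal sketch of that step. The sample markup is invented for illustration, since the real FreeNAS page layout is not shown here, and Get-PercentFromHtml is a hypothetical helper name.

```powershell
# Sketch only: pull the first "NN%" or "NN.N%" value out of a block of HTML.
# Get-PercentFromHtml is a hypothetical helper; adjust the pattern to the real page.
function Get-PercentFromHtml {
    param([Parameter(Mandatory)][string]$Html)
    if ($Html -match '(\d{1,3}(?:\.\d+)?)\s*%') {
        return [double]$Matches[1]
    }
    # Nothing that looks like a percentage was found
    return $null
}

# Invented sample markup standing in for the HTML returned by the site
$sample = '<tr><td>Resilver</td><td>47.5% complete</td></tr>'
$percent = Get-PercentFromHtml -Html $sample
"Rebuild is at $percent percent"
```

Comparing two readings taken some minutes apart, and calling Send-MailMessage when they are equal, would be one way to build the stall alert described in the backstory.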

Sep 18 2014

HOWTO: Restore AD Object from 2008 R2 Domain

I am in a situation where I need to delete a critical production Database server computer object in Active Directory for an upgrade but in the event that upgrade fails, I will need to restore the original computer object.

To that end I found an excellent Technet blog on the subject at http://blogs.technet.com/b/askds/archive/2009/08/27/the-ad-recycle-bin-understanding-implementing-best-practices-and-troubleshooting.aspx.

But for those of you that don’t want to read and just want the shortest possible answer, check out below:

Note: The recycle bin must be enabled in advance. If you deleted something before enabling it and wish to restore it, I’m afraid you’re not going to be happy.

Identify the object to restore

# Identify which objects are available in your recycle bin.

# Note in our case we have many Domain Controllers and so to speed up the process and because I know which DC the object was deleted on, we’re going to specify a specific DC

# This will produce a list of all objects where the most recently deleted object will be at the very end of the list

Get-ADObject -server CORPDC1 -filter 'isdeleted -eq $true -and name -ne "Deleted Objects"' -includeDeletedObjects -property * |

Where {$_.samAccountName -ne $null} | select samaccountname, whenChanged | sort whenChanged

Restore the object

# Once you have confirmed the samaccountname of the object you wish to restore, specify it and pass it to the Restore-ADObject cmdlet

Get-ADObject -server CORPDC1 -filter 'isdeleted -eq $true -and name -ne "Deleted Objects"' -includeDeletedObjects -property * |

Where {$_.samAccountName -eq 'john.smith'} | Restore-ADObject

Tada! The object is now restored.

Sep 16 2014

HOWTO: Get-ADUser for Display Names

When working with Active Directory from PowerShell, you’ll often find yourself using the Get-ADUser cmdlet, typically looking up a user account by typing something like Get-ADUser jsmith. That works just fine because jsmith is the samaccountname for a user in Active Directory. But what happens if you want to look up by display name? Or, more commonly, you are given a list of employees in a CSV and you need to look up those AD accounts. If you try to supply the display name in the same way, you’ll get an “Object not found” error. Example:

Get-ADUser jsmith <- Works

Get-ADUser "John Smith" <- Fails

So, how do you use the Get-ADUser cmdlet to look up users if all you have is their display name? Like this:

Get-ADUser -Filter { DisplayName -eq "John Smith" }

This has come up often enough, and I stumble over it each time, that I figured other people must be having the same challenge, so I’d document it.
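For the CSV case mentioned earlier, the same filter can be applied to each row in a loop. Below is a minimal sketch that assumes the CSV has a column called DisplayName. Resolve-DisplayName and the C:\temp\employees.csv path are hypothetical. The lookup is passed in as a script block so the loop can be tried without a domain, and the default lookup needs the ActiveDirectory module.

```powershell
# Sketch only: resolve each display name in a CSV to an AD account.
# Assumes a CSV column called DisplayName; Resolve-DisplayName is a hypothetical helper.
function Resolve-DisplayName {
    param(
        [Parameter(Mandatory)][string]$CsvPath,
        # The default lookup requires the ActiveDirectory module to be available
        [scriptblock]$Lookup = { param($name) Get-ADUser -Filter "DisplayName -eq '$name'" }
    )
    foreach ($row in Import-Csv -LiteralPath $CsvPath) {
        # Display names are not unique, so collect every match
        $found = @(& $Lookup $row.DisplayName)
        [pscustomobject]@{
            DisplayName    = $row.DisplayName
            SamAccountName = ($found | ForEach-Object { $_.SamAccountName }) -join ';'
            MatchCount     = $found.Count
        }
    }
}

# Example call against a real domain:
# Resolve-DisplayName -CsvPath C:\temp\employees.csv | Format-Table -AutoSize
```

Rows with a MatchCount of 0 or more than 1 are the ones to check by hand. Names containing an apostrophe will break the quoted filter string as written, so treat those separately.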

Sep 11 2014

HOWTO: Build Team Shared Documentation Platform

Do you fight to keep good documentation? Do you struggle with others who don’t make any documentation? Do you find the entire process of creating documentation too cumbersome? Do you find situations where you know someone made documentation but you can’t find it? Do you use a web-based wiki today that absolutely sucks for handling screenshots? Do you find documentation sits in the inboxes of your IT employees and when they leave the company, no one can find anything anymore? If any of these conditions are true, what would you say if I could give you and your team a centralized shared document repository, fed by nothing more than an email, with fantastic native inline screenshots that need nothing more than Control-C and Control-V? What if you could then share those links with anyone in your organization and, as corrections and improvements are made, edit the shared documents using nothing more than Microsoft Word?

I’ve spent a lot of time investigating IT documentation products and I’ve found them all to be incredibly lacking when it comes to screenshot management and formatting with things like tables and colors. Fortunately I’ve now, in my opinion at least, solved this issue and found a way to provide brain-dead simple, single-click documentation that anyone can make. In fact it’s so easy, you can chastise your peers for not doing documentation! Oh, and on top of all of this? You can implement it without any additional cost beyond the Windows licenses you already own. That’s right, free! The requirements are:

1) Active Directory Domain Controller

2) Exchange Server

3) Windows Client with Microsoft Outlook and Word

4) SharePoint 2013 Foundation (aka the free one)

The basic concept is to leverage the already fantastic editor that Outlook provides, including its ability to add inline images using nothing more than copy and paste from your clipboard. A user creates a new email and writes their documentation just as they would any other email. Once they are done, they send the message (or simply cc it, if desired) to an email address. SharePoint monitors this email address constantly, takes anything sent to it and adds it to a document library in SharePoint, including metadata such as the user that uploaded it. It also indexes the content for search, which can be used by anyone in your organization with access to this SharePoint site.

Sep 10 2014

HOWTO: Perform lookup conversions in PowerShell

This HOWTO describes how to use PowerShell when you have a series of values and want to display a different, friendlier set of values in their place. Think of a scenario where you get an array that contains country codes such as "CA, US, JP" but you want them to display as "Canada, United States, Japan". Here is how you can do that. Special thanks to Ed Wilson, aka the Scripting Guy, as this was a recent post of his:

http://blogs.technet.com/b/heyscriptingguy/archive/2014/09/10/inventory-drive-types-by-using-powershell.aspx
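To make the country code scenario concrete, here is a minimal sketch using a hashtable as the lookup table. The variable names are illustrative, and the extra XX code is an addition of mine to show what happens when a value has no match.

```powershell
# Sketch only: translate codes to display names with a hashtable.
$countryNames = @{
    CA = 'Canada'
    US = 'United States'
    JP = 'Japan'
}

# XX is not in the table and is included to exercise the fallback
$codes = 'CA', 'US', 'JP', 'XX'

$names = foreach ($code in $codes) {
    if ($countryNames.ContainsKey($code)) { $countryNames[$code] }
    else { "Unknown ($code)" }   # fall back rather than emit an empty value
}

$names -join ', '
# -> Canada, United States, Japan, Unknown (XX)
```

Keeping the mapping in one hashtable means a new code only has to be added in one place, and the fallback branch makes unmapped values visible instead of silently blank.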

Consider the scenario where you want to list the types of disks on a system. You find this command:

Get-CimInstance Win32_LogicalDisk | Select DeviceID, DriveType | ft -AutoSize

The output of which looks like:

DeviceID DriveType

-------- ---------

C: 3

D: 3

E: 2