There are countless situations where an IT professional needs to parse through a log file. In most instances, notepad/notepad2/notepad++ are enough to get in, find the information required and get out. But what if your log files are large. As in 20GB a day large. None of the typical editing tools will help you in this case as the files are simply too large to open. In this case, you can switch to more specialized text viewers such as LogExpert. But what if you need to actually manipulate the data in these log files to perform some kind of analysis? In other words, what if you have to review every single line in that 20GB a day file and do something to compare it to some other line? But before you can even do that, you have to reformat the data as the original source includes a bunch of cruft and formatting that you simply don’t want. This is where things get interesting and this is what this blog post will help you to solve.

In this specific scenario, I have a Windows DNS server that is very heavily used by tens of thousands of endpoints. The request from management was to identify which two hour block of time over the course of a week where the DNS servers are least utilized. In addition, there there was a want to know what the top 20 most requested DNS records were during a given 24 hour period. At first blush, I thought this would be fairly simple:

- Enable DNS Debug Logging within the DNS Management Console

- Capture 24 hours worth of data

- Parse the resulting file to extract the date stamps and queries for that time period

- Group the results such that we can find the total number of queries as well as the most popular queries

I initially tried this approach and while it would have worked with smaller files, it failed miserably in this case due to size of the data involved. This DNS server was generating log files in the neighborhood of 240MB per minute. My initial parsing code to extract the query names was taking about 90 minutes to run on each file. As a result, a large number of queries were missed. I eventually realized that if I was going to solve this problem, I was going to have to get clever and optimize.

The first thing I did was take a look at the data to see if there was a way I could make the resulting log file any smaller. I realized that I was storing 24+ bytes for the date and time. Normally this shouldn’t be a big deal but when you multiply this by the 12 million queries an hour and suddenly you realize the dates alone are costing you almost 300MB an hour that have to later be parsed. I next realized that trying to save everything into one giant CSV was just going to make later processing more difficult. To combat this, I opted to generate each file based on the current time in 24 hour format. File would be named “server-10172014-14.csv”, “server-10172014-15.csv”, “server-10172014-16.csv”, etc.

The next thing I did was take advantage of a wonderful cmdlet in PowerShell called Measure-Command. This cmdlet allows you to run any number of other commands and it will automatically keep track of how long an operation took to complete. This makes it the ideal tool for finding the most efficient technique for processing a given set of data. One of the issues I had to resolve was how to actually write the processed data back out to a file. Because it was so large, it was taking an very long time to write when using the “Export-CSV” cmdlet. Using Measure-Command, I was able to determine that saving a specific large CSV file using Export-CSV took 37 seconds on my laptop. I realized there had to be a better way. I eventually stumbled upon the .NET class “StreamWriter” which effectively gives us low level access to the underline NTFS filesystem. Using this technique, I was now able to save the same file in just over 1 second.

At this point, I had a series of 24 log files per day with each file about half a gigabyte in size. The next question is, how can I determine how many queries happened per day and which queries occurred most frequently. Normally, Excel would be able to answer these questions easily. But once again, due to the size of the data involved, Excel could not even open these files as the 32 bit version is limited to a little over 1 million rows per file. While investigating alternatives, I eventually stumbled across something called “Pandas” or “Python Data Analysis Library”. This made the claim of being able to process hundreds of millions of records very efficiently. This makes it useful for all sorts of data analysis. It took a while to get it working so I figured I’d save you all trouble and simply give you all the links you need to install it:

1) Install Python 2.7 itself

https://www.python.org/ftp/python/2.7.8/python-2.7.8.amd64.msi

2) Install Python for Windows Extensions

http://downloads.sourceforge.net/project/pywin32/pywin32/Build%20217/pywin32-217.win-amd64-py2.7.exe

3) Install Microsoft Visual C++ Compiler for Python

http://www.microsoft.com/en-us/download/confirmation.aspx?id=44266

4) Download Easy Setup for Python

[From PowerShell 3 Prompt] C:\Python27\> (Invoke-WebRequest https://bootstrap.pypa.io/ez_setup.py).Content | .\python –

5) Install Numpy for Windows

http://www.lfd.uci.edu/~gohlke/pythonlibs/#numpy – numpy MKL 1.9.0.win amd64 py2.7.exe

7) Install DateTime Util for Python

C:\Python27\Scripts\easy_install.exe python-dateutil

8) Install Pandas for Python

C:\Python27\Scripts\easy_install.exe pandas

With Pandas, I was able to analyze and group the top 20 results of a 2GB CSV file in under 30 seconds on a 5 year old I5 laptop. Very impressive stuff! Note that the syntax used by Python/Pandas is very unusual for someone coming from say a PowerShell background. But I managed to piece enough of it together and will share the commands I learned below.

Ultimately, here are the 5 high level steps I ultimately came up with in order to parse 60GB a day worth of DNS logs:

- Create a log destination folder on the target machine and enable NTFS compression on the folder to minimize the target disk use. Create sub folders for each Domain Controller to be analyzed

- Run Poweshell script against each of the DNS servers you wish to analyze. This script will record every DNS query made to the server and store the results on the destination server, filtered to only include the query hostname itself. The filename will record the data and time stamp and the times will roll over to a new file

- Leave collecting scripts running for 7 days or for the duration of time you wish to capture

- Once scripts have been stopped and therefore no longer have any locks on any of the CSV files, run CountLogEntries.ps1. This will grab a list of all of the CSV files present and using an optimized .NET technique, count how many lines are in each file which maps to how many queries were made for that hour. It will output the results to the screen. This can then be copied and pasted into Excel and further manipulated to show how many queries per hour per server were made

- With no scripts running, run groupresults.ps1. This will run through all of the generated CSV files for each hour and create a file called [servername]-results.csv that contains all of the grouped data for each file. It does this by calling a Python script that does the heavy lifting of the sorting and grouping. It will produce a list of the top 20 most requested queries from each hour and append those results to a CSV file. From there, the user will import the results into Excel and use the Subtotal function to get the specific totals they are interested in.

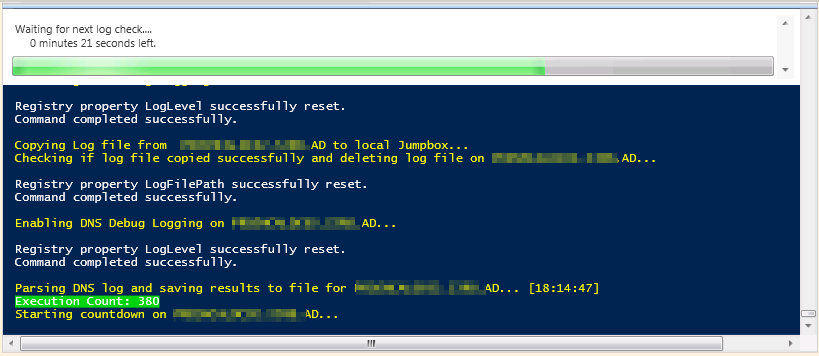

Below is a sample screenshot of what the DNS parser looks like when it’s run inside of the PowerShell ISE:

CaptureDNSLOG.PS1

# Connects to a DNS server, enables DNS debug logging and extracts the queries made against the server to a file

# DNS; Stream Writer; Open File; Fast;

# The location of the psexec.exe tool from Sysinternals that is used to remotely connect to the DNS server to run 'dnscmd' commands

$PSExecPath = "c:\bin\pstools"

# The location of the DNS logs relative to the DNS server running them (ie this is the local path to the DNS log)

$DNSServerLogPath = "d:\dnslogs"

# This path should mirror the path above and is only seperate as we need to reference it via network UNC path

$UNCDNSServerLogPath = "d$\dnslogs"

# This is where the DNS logs will be generated and stored. Recommended to have a different folder for each DNS server but not required

$DestinationLogPath = "d:\scripts\powershell\DNS\LOGS\DC1"

# Generates a progress bar for a specific duration giving time for the DNS server to process incoming queries and write them to the log file

Function Wait-RVCountdown

{

$MinutesToWait = 0.5

$TimeToWait = $MinutesToWait*60

$length = $TimeToWait / 100

while($TimeToWait -gt 0)

{

$min = [int](([string]($TimeToWait/60)).split('.')[0])

$text = " " + $min + " minutes " + ($TimeToWait % 60) + " seconds left"

Write-Progress "Waiting for next log check..." -status $text -PercentComplete ($TimeToWait/$length)

start-sleep -s 1

$TimeToWait--

}

}

Function Gather-DNSLogs ($DNSServerName, $DNSServerIP)

{

$ExecutionCount = 1

# Use the dnscmd.exe command to set the DNS log path. Note the 2> $null prevents false error messages from being displayed by psexec to the console

Write-host "Setting DNS Log File Path on $DNSServerName..." -ForegroundColor Yellow

& $PSExecPath\psexec.exe \\$DNSServerIP dnscmd $DNSServerName /Config /LogFilePath $DNSServerLogPath\$DNSServerName.log 2> $null

Write-host "Setting DNS Log File Max Size on $DNSServerName..." -ForegroundColor Yellow

& $PSExecPath\psexec.exe \\$DNSServerIP dnscmd $DNSServerName /Config /LogFileMaxSize 300000000 2> $null

# 0x6101 effectively enables logging of incoming queries only on UDP

Write-host "Enabling DNS Debug Logging on $DNSServerName..." -ForegroundColor Yellow

& $PSExecPath\psexec.exe \\$DNSServerIP dnscmd $DNSServerName /Config /LogLevel 0x6101 2> $null # Enable Logging

# Designed to loop forever until the administrator stops the logging.

While(1 -eq 1)

{

Write-Host "Execution Count: $ExecutionCount" -BackgroundColor Green

Write-host "Starting countdown on $DNSServerName..." -ForegroundColor Yellow

Wait-RVCountdown

# We need to disable logging so we can copy the file from the DNS server to the local machine for further processing

Write-host "Disabling DNS Debug Logging on $DNSServerName..." -ForegroundColor Yellow

& $PSExecPath\psexec.exe \\$DNSServerIP dnscmd $DNSServerName /Config /LogLevel 0 2> $null # Disable Logging

Write-host "Copying Log file from $DNSServerName to local machine..." -ForegroundColor Yellow

Copy-Item "\\$DNSServerIP\$UNCDNSServerLogPath\$DNSServerName.log" $DestinationLogPath -Force

# Discovered that by simply resetting the LogFilePath, the existing file is deleted which is the behavior we want

Write-host "Checking if log file copied successfully and deleting log file on $DNSServerName..." -ForegroundColor Yellow

if (Test-Path $DestinationLogPath\$DNSServerName.log) { & $PSExecPath\psexec.exe \\$DNSServerIP dnscmd $DNSServerName /Config /LogFilePath $DNSServerLogPath\$DNSServerName.log 2> $null }

Write-host "Enabling DNS Debug Logging on $DNSServerName..." -ForegroundColor Yellow

& $PSExecPath\psexec.exe \\$DNSServerIP dnscmd $DNSServerName /Config /LogLevel 0x6101 2> $null # Enable Logging

# Get the current date and write it to the screen so we can get an idea if it has locked up during a long running execution

$CurrentTime = Get-Date -Format HH:mm:ss

Write-host "Parsing DNS log and saving results to file for $DNSServerName... [$CurrentTime]" -ForegroundColor Yellow

#Include the current hour in 24 hour format and include it in the filename so that each file contains 1 hour worth of data

$TodaysDate = get-date -format "MMddyyyy-HH"

# Use the StreamWriter .NET object as it was determined to be much, much faster than any of the built in PowerShell options. The $true option on the end enables Append to file

$OutputFile = new-object 'System.IO.StreamWriter' -ArgumentList "$DestinationLogPath\$DNSServerName-$TodaysDate.csv",$true

# The ReadCount paramater means read in this many lines before processing. Otherwise it will try to read in the entire file first which is much, much slower

Get-Content "$DestinationLogPath\$DNSServerName.log" -ReadCount 10000 | ForEach-Object {

foreach ($line in $_)

{

if ( $line -match "^\d\d" -AND $line -notlike "*EVENT*")

{

$Query=($line.ToString().Substring(110)) -replace "\s" -replace "\(\d?\d\)","." -replace "^\." -replace "\.$"

if($Query) { $OutputFile.WriteLine("$Query", "Append") }

}

}

}

$ExecutionCount++

# As we are doing our own file management, we have to close our handle once we're done with it

$OutputFile.close()

}

}

# Pass in the DNS name and the IP address of your DNS server. I could have automated this but this works well enough

Gather-DNSLogs DNSSERVER1.CORP.LOCAL 10.1.2.8

CountLogEntries.PS1

$Files = gci "d:\scripts\powershell\dns\logs" *.csv -Recurse

$Files | % {

$LineCount = ([io.file]::ReadAllLines($_.FullName)).count

if($?) { Write-Output "$_, $LineCount" } Else { Write-Output "$_", "File Locked"}

}

GatherResults.PS1

$python="C:\Python27\python.exe"

$MyPythonScript="D:\Scripts\PowerShell\DNS\groupresults.py"

$ResultsFile = "D:\Scripts\PowerShell\DNS\LOGS\DC1-results.csv"

$Files = gci "D:\Scripts\PowerShell\DNS\LOGS\DC1\" *.csv

$Files | % {

& $python $MyPythonScript $_.FullName $ResultsFile

}

GatherResults.py

from sys import argv

script, LogFile, ResultsFile = argv

print "%s" % (LogFile)

import numpy

import pandas

names=['Query']

df = pandas.read_csv(LogFile, names=names)

grouped = df.groupby('Query')

result = grouped.size()

result.sort()

top20 = result[-20:]

top20.to_csv(ResultsFile, mode='a', header=False)

1 comment

Use Long Path Tool, Long Path Tool can simplify and probably end your problems in unlocking, managing and renaming files that appear to have a long filename.