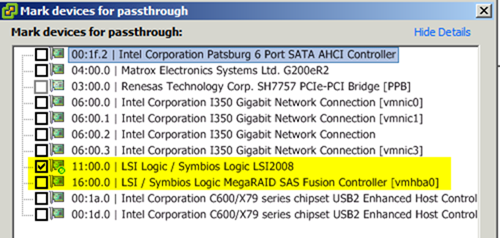

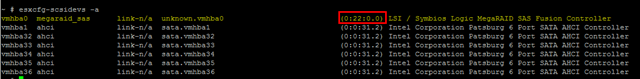

Consider the following scenario: You have a physical IBM server running Windows 2008 R2 with an LSI SAS controller PCIe card connected to an IBM LTO5 tape library and controlled via NetBackup 7.6. You wish to retire the Windows 2008 server and move the SAS card into a new IBM x3650 M4 server running ESXi 5.5. Once that’s done, you wish to configure DirectPath to map the physical card to your new Windows 2012 R2 NetBackup VM. At this point, there should be nothing in the story that sounds terribly difficult. Physically move the card into the new ESXi host, configure DirectPath to allow the card for Passthru, reboot and you’re done. Well, that WOULD be true… but only so long as you pick the right check box. It turns out the VMware DirectPath box can be much more dangerous than I first thought. What do I mean? Here is the screen I was presented with after installing the LSI SAS card. The keywords in that sentence are LSI and SAS.

See the keywords “LSI” and “SAS”? So did I. So I picked that one and pressed OK.

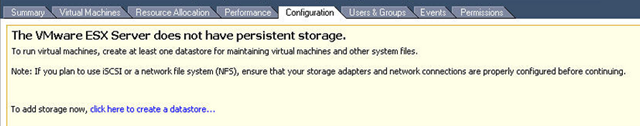

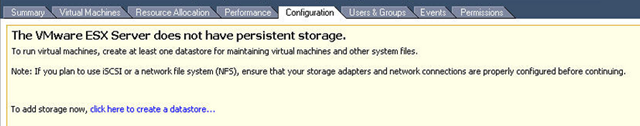

I was told I had to restart the host for the change to take effect. So I did. That turned out to be one of the stupider decisions I’ve ever made in my life. Can you guess why? Before we explain that, let me show you what I was greeted with after I rebooted the server. ESXi booted fine except this is what I saw when I connected to it:

Excuse me, what? What happened to my 11 Terabytes of VMs??? This is where I had my first heart attack of the night. I tried to go back to Advanced Settings and uncheck the card and reboot, but that unfortunately did absolutely nothing.

So, do you know what happened? In the screenshot above, you’ll note that “LSI Logic / Symbios Logic LSI2008” is selected but that’s only because I took the screenshot once everything was working again. The first time around however, I selected “LSI / Symbios Logic MegaRAID SAS Fusion Controller.” Why? Because it said LSI and SAS in the name and that seemed good enough for me.

What ultimately happened is that I told VMware to enable passthru on the primary RAID controller that manages the primary datastore. Dutifully, it did exactly that. Then, once the server rebooted, since the controller was remapped, ESXi couldn’t connect to any of the datastores, and thus I was presented with the warning above. As you might imagine, it was at this point that I got on the phone with VMware technical support. We went through and started troubleshooting. This process in itself was painful, as each change required a reboot, which on this server took close to 11 minutes. (More on that later.)

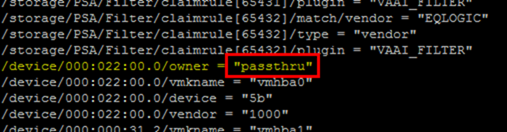

Eventually, we found ourselves looking inside the file /etc/vmware/esx.conf. (As an aside, it’s funny how hard it is to change a product name in code. By all accounts this file should likely be called esxi.conf, but I digress.)

As we looked through the file, we found the line /device/000:022:00:0/owner = “passthru”
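If you’d rather not scroll through the whole file, a quick filter along these lines should list every device currently handed over to passthru. This is only a sketch: `passthru_devices` is a name I made up for illustration, and it assumes the owner lines in the real file use plain straight double quotes rather than the curly ones the blog renders.

```shell
# Sketch: print the address of every device whose owner is "passthru".
# passthru_devices is a hypothetical helper name, not a VMware tool.
# Assumes lines of the form: /device/<address>/owner = "passthru"
passthru_devices() {
    conf="$1"   # path to esx.conf (or a copy of it)
    # -n suppresses normal output; p prints only the lines that matched,
    # reduced to the captured device address.
    sed -n 's|^/device/\(.*\)/owner = "passthru"$|\1|p' "$conf"
}

# Example: passthru_devices /etc/vmware/esx.conf
```

Anything this prints is a device ESXi has been told to give away to a VM, so it is worth checking each address against the hardware you actually meant to pass through.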

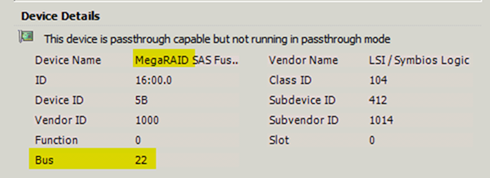

Interesting. I wonder what the 22 means? Let’s go back to the DirectPath configuration and check the SAS controller configuration:

Ah ha! Bus 22 is the RAID controller we want, but it’s currently configured for Passthru! Another way to figure this out is using the command esxcfg-scsidevs -a. However, during the actual failure, this command was not returning the controller at all; it only started showing up once the issue was corrected (which is when the screenshot was taken). I’m not sure why the GUI and CLI produced different results here. vCenter remembered maybe?

So we’ve found the problem and it looks like a simple fix. All we need to do is change the keyword “passthru” to “vmkernel”, save the file, reboot and we should be back in business. So we do that, reboot and…

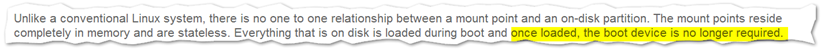

“Oh sh!@”, I think. It took us a while to figure out what was going on. But the clue should have been “wait a minute, if ESXi can’t see its storage controller, how the heck is ESXi booting in the first place since the OS lives on the same array?”

After talking with an escalation engineer at VMware, I have an explanation. I likely have the following terms wrong, but the concepts I’m sure are valid. When ESXi first boots, it runs in a kind of kernel mode. This gives it low-level access to the disks, among other things. It then boots up and copies everything it needs into RAM. Importantly for our purposes, this boot process happens before esx.conf is loaded. This allows ESXi to fully boot, at which point it passes control over to a kind of user mode. Once this context switch takes place, any disk configuration (such as passthru) that esx.conf requests is implemented. In other words, in my scenario, ESXi booted and then (because I told it to) effectively disconnected itself from its own storage but kept working because it was relying entirely on its in-memory copy of the OS and configuration.

See the issue? We kept making modifications to correct this problem from both the VI client and by editing the esx.conf file directly, but since these changes require a reboot to take effect, they kept getting lost each time we rebooted. Below is a quote from a VMware KB article on this subject (which we’ll reference again later) that explains this as well:

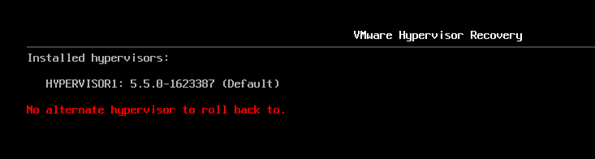

At this point I’m going to jump ahead to what appears to be the easy solution to this issue. However, it was not the solution we implemented, as we only realized we could do it after we fixed this the hard way. You see, it turns out that the code and configuration that ESXi boots from is collectively called the “Bootbank”. The engineers that wrote ESXi obviously realized that people like me were going to come around every once in a while and make really stupid configuration changes that would prevent ESXi from booting. So what they do is keep a primary and an alternate bootbank.

When you make any changes to ESXi, those changes are committed only to the in-memory configuration and thus will not persist after a reboot. To combat this, VMware has a shell script called /sbin/auto-backup.sh that runs automatically. What this script does is take all of the collective configuration files (including esx.conf) and store them in a compressed file called local.tgz. That file is then compressed again and saved as state.tgz. Two copies of this file exist on two different partitions on the local file system, each from a different point in time. Therefore, to correct the issue above, it appears all I needed to do was reboot the server and, while ESXi was booting, press Shift-R to enter recovery mode and select the alternate bootbank.

However, it’s worth noting I just tried this in my lab and this is what I got:

I suspect this is because this was a net new deployment of ESXi and hasn’t run long enough to generate the alternate bootbank. Unfortunately I can’t reboot the production server in question to find out if it was an option there for hopefully obvious reasons.

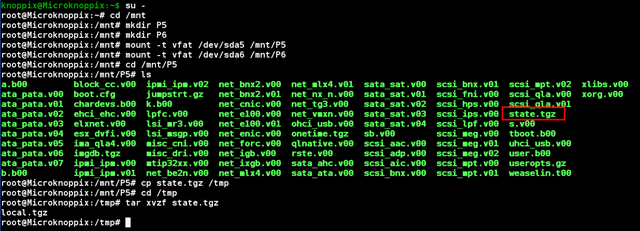

That’s ok, we can do it the hard way. It turns out that while VMware uses its proprietary VMFS file system for VMs, it still relies on FAT16 for its configuration data. This means it’s technically readable by any Linux OS. Thankfully, our server has a remote management card that supports virtual ISO mounting. So we downloaded the Knoppix Recovery Live CD, which I ended up finding via Google as the VMware guys were unable to recommend one.

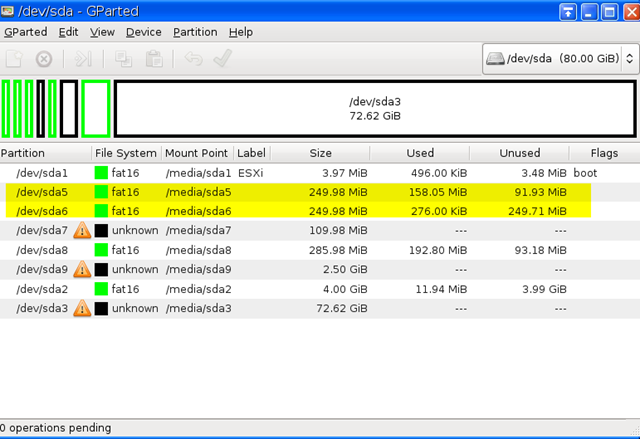

Once booted, you can open up GParted which the developers kindly included a link to right on the desktop. From here we can see that two 250MB partitions are present, otherwise known as Hypervisor1 and Hypervisor2 which in my case are on sda5 and sda6.

What we need to do now to correct our issue is:

- Mount the two hypervisor partitions (we need to look in both as we don’t know which one is currently the active one)

- Find the file state.tgz on both partitions and determine which one is newer (aka active)

- Extract the files inside of state.tgz using tar and specifically edit the file called esx.conf.

- Find the entry that is configuring passthrough for your RAID controller, in my case this line: /device/000:022:00:0/owner = “passthru”

- Replace the keyword passthru with vmkernel and save the file

- Using tar, recompress the files back into state.tgz and copy the new file over the existing one

- Reboot and magically your datastore should return!
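For anyone who prefers text to screenshots, here is a rough sketch of those steps as a script you could run as root from the Live CD. Treat it as an illustration rather than the exact commands we typed that night: `newer_file` and `fix_state_tgz` are names I invented, and it assumes that state.tgz holds local.tgz, that local.tgz unpacks to etc/vmware/esx.conf, and that the owner line uses straight double quotes. The device address and partition names are the ones from my server and will differ on yours.

```shell
# Sketch of the offline repair, meant to run as root from a Linux Live CD.
# newer_file and fix_state_tgz are hypothetical helper names.
# Assumed layout: state.tgz -> local.tgz -> etc/vmware/esx.conf

# Print whichever of two files was modified more recently.
newer_file() {
    if [ "$1" -nt "$2" ]; then echo "$1"; else echo "$2"; fi
}

# Rewrite one device's owner from "passthru" to "vmkernel" inside state.tgz.
fix_state_tgz() {
    state="$1"     # e.g. /mnt/hv1/state.tgz
    device="$2"    # e.g. 000:022:00:0 (yours will differ)
    work=$(mktemp -d) || return 1
    mkdir "$work/state" "$work/local" || return 1

    cp "$state" "$state.bak" || return 1                  # keep the original
    tar xzf "$state" -C "$work/state" || return 1         # yields local.tgz
    tar xzf "$work/state/local.tgz" -C "$work/local" || return 1

    conf="$work/local/etc/vmware/esx.conf"
    [ -f "$conf" ] || return 1
    # Only the owner line for this exact device address is rewritten.
    sed "s|^\(/device/$device/owner = \)\"passthru\"|\1\"vmkernel\"|" \
        "$conf" > "$work/esx.conf.new" || return 1
    cat "$work/esx.conf.new" > "$conf"                    # keeps the file mode

    # Repack in the same two layers, then overwrite the copy on the partition.
    (cd "$work/local" && tar czf "$work/state/local.tgz" *) || return 1
    (cd "$work/state" && tar czf "$work/state.new.tgz" *) || return 1
    cp "$work/state.new.tgz" "$state"
}

# Example:
#   mkdir -p /mnt/hv1 /mnt/hv2
#   mount -t vfat /dev/sda5 /mnt/hv1
#   mount -t vfat /dev/sda6 /mnt/hv2
#   fix_state_tgz "$(newer_file /mnt/hv1/state.tgz /mnt/hv2/state.tgz)" 000:022:00:0
#   umount /mnt/hv1 /mnt/hv2
```

The backup copy (state.tgz.bak) is left next to the original on purpose, so a bad edit can be undone from the same Live CD by copying it back.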

Below is an example of what this looks like as I just tried it again in my lab.

You can find more complete instructions in this VMware KB article: http://kb.vmware.com/selfservice/microsites/search.do?language=en_US&cmd=displayKC&externalId=2043048 (which I only found this afternoon, well after the issue was resolved)

And there you have it. I ended up spending over 7 hours on the phone with VMware and 3 technicians to identify and resolve a problem that started by innocently selecting the wrong LSI Controller for DirectPath.

Moral of the story: If you’re making configuration changes to a server, read every word of the name of the device you’re changing and make sure it’s the device you expect! In this case, I probably should have gone as far as to match up the bus ID VMware saw to the one the BIOS reported, since the names, as we have seen, can be confusing.

As an aside, it’s worth noting that when the primary storage was unavailable due to my mistake, ESXi was taking around 11 minutes to boot. The bulk of this time was spent trying to load the NFSClient module (upwards of 6 minutes!). That was especially odd since this server relied on local storage exclusively and did not use NFS. Once the storage connectivity was restored, this module loaded in about 11 seconds.

Lastly, during my conversation with VMware, I was informed that VMware no longer supports running tape drive controllers using DirectPath. That’s not to say it won’t work but just that if it doesn’t work for you, they are not going to provide you any troubleshooting assistance. I’ve been using this approach in some capacity for years and it’s always worked really well so I was surprised to hear that. They must have had a lot of support calls on this.

18 comments

I just used this information last night to successfully recover from this situation. A few others had articles with similar steps, but yours was the most put together, concise and well written.

The last screen shot of you typing the commands in the terminal was super helpful. In one shot it said it all, and it was pretty much that easy.

My device was a MegaRAID controller. My state.tgz ended up being under /dev/sda5. I tried the Shift+F5 and received the same message as you, “No alternative Hypervisor to roll back to”.

Also, as is mentioned earlier in the article, state.tgz contains local.tgz which then contains the configuration files. I did get a little concerned that I wasn’t doing this right, just because it wasn’t explicitly written, but here it is just for good measure.

So when it is mentioned to “Extract the files inside of state.tgz using tar and specifically edit the file called esx.conf.”. Remember you must extract local.tgz from state.tgz and then extract state.tgz to create the “etc” folder which contains the esx.conf file, to prevent trouble down the road I would suggest renaming or deleting the local.tgz and state.tgz in the /tmp folder. Then I edited the esx.conf with “vi etc/esx.conf”. In turn, the same goes when it is mentioned to “Using tar, recompress the files back into state.tgz and copy the new file over the existing one”, you will then “tar czf local.tgz etc” to compress the etc folder into local.tgz, and then “tar czf state.tgz local.tgz” to compress local.tgz into state.tgz. Then you can copy this state.tgz file back over the existing one to make it live before rebooting.

Something else worth mentioning. I had a time with getting a Linux LiveCD to work on the server. For reference here is what happened with me.

– Knoppix (would load fine until it got to loading the X Window system. It would generally just flicker black screen off and on, or go out of range for the monitor. [it’s on a KVM]. Even trying to boot into CLI mode with “knoppix 2” at the command line. It would go to black screen after getting to the root prompt)

– DSL (Damn Small Linux) – It complained about the keyboard missing, odd, oh well… next!

– Ubuntu – Similar to Knoppix, issues arising from the X Window system, and couldn’t quite seem to figure out how to get to CLI only mode. Also tried changing vga mode on the boot up options, to no avail.

– SystemRescueCD (http://www.sysresccd.org) I love this LiveCD! It is based off of the Gentoo distribution, and goes right into CLI, if you want X Window you can run startx. Anyway this is the one that worked for me.

Hope some of that may help someone in the future also. In times of need it is pages like this that come to the rescue, and after you bailed me out I really wanted to let you know and contribute. Thank you for the excellent article!

I had a typo! I thought it would give me a chance to preview/edit before posting. Anyway, I will update the 3rd paragraph here:

So when it is mentioned to “Extract the files inside of state.tgz using tar and specifically edit the file called esx.conf.”. Remember you must extract local.tgz from state.tgz and then extract local.tgz to create the “etc” folder which contains the esx.conf file, to prevent trouble down the road I would suggest renaming or deleting the local.tgz and state.tgz in the /tmp folder. Then I edited the esx.conf with “vi etc/esx.conf”. In turn, the same goes when it is mentioned to “Using tar, recompress the files back into state.tgz and copy the new file over the existing one”, you will then “tar czf local.tgz etc” to compress the etc folder into local.tgz, and then “tar czf state.tgz local.tgz” to compress local.tgz into state.tgz. Then you can copy this state.tgz file back over the existing one to make it live before rebooting.

Hey Jason,

Thanks for the comment and I’m glad you managed to resolve your situation! I know I was sweating bullets for hours that night and was pretty sure I had committed a NERE (New-Employment-Required-Event).

Thankfully though it all worked out and I learned something about ESXi in the process. And, it turns out, I managed to help someone else in the process.

Great post, this helped me a lot.

Thanks

Hello,

Ran into this issue on ESXi 6 U2 when a customer tried to pass through his USB devices to a VM because the arbitrator service was not able to do it. Still worked for me – thx a lot guys ;-).

Regards MB

Dears, could anyone kindly help me with the steps? I can’t edit this file. Please!

Thank you, you just saved me quite a few hours of work! While I was prepared to go the unzip/edit/zip route, thankfully Shift-R worked for me.

Not all heroes wear capes. Thank you very much. I needed to change the file in P6. I also ran into some problems with tar, which told me to remove the first / of the member name. So I used the following commands:

su

mkdir /mnt/p6

mount -t vfat /dev/sdb6 /mnt/p6

mkdir /tmp/p6

cp /mnt/p6/state.tgz /mnt/p6/stateBackup.tgz

cp /mnt/p6/state.tgz /tmp/p6

cd /tmp/p6

tar xzf state.tgz

rm state.tgz

tar xzf local.tgz

rm local.tgz

nano /tmp/p6/etc/vmware/esx.conf

(change passthru to vmkernel) Ctrl+S -> Ctrl+X

cd /tmp/p6

tar czf local.tgz etc

tar czf state.tgz local.tgz

cp /tmp/p6/state.tgz /mnt/p6/

overwrite

umount /dev/sdb6

reboot

Done

Thanks again!!!

I just saw my datastores alive again. I want to cry. Thanks for this post, I really appreciate it.

[…] To replace the entry, use vi, substituting passthru with vmkernel, which is what I did. I rebooted the host a third time and much to my annoyance it was still missing the datastore. After some more digging around, I came across these two links; The wrong way to use VMware DirectPath and KB2043048. […]
