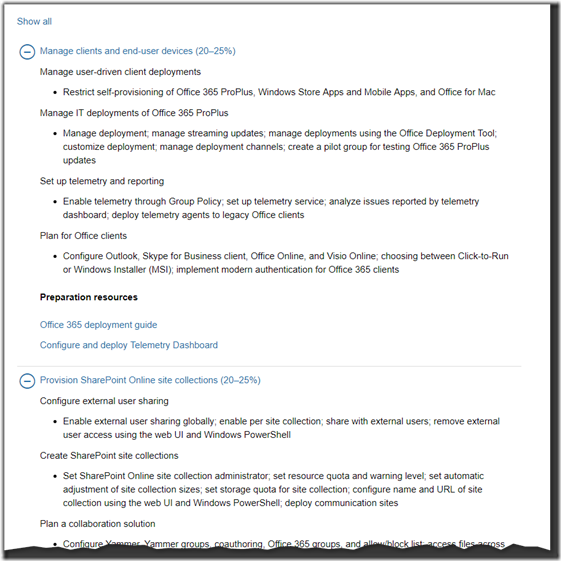

Are you studying for a Microsoft certification exam? If so, then you are probably familiar with the “Skills Measured” website that describes what will be covered on your exam. If not, it looks something like this:

I’m currently studying for my 70-347 – Enabling Office 365 Services exam. The skills measured list above is a great starting point to use to determine what to search for and study and experiment with in your lab. However the layout of the website has never appealed to me. I would much prefer the text in Excel where I can annotate and color code it. Unfortunately you can’t just copy and paste the text from the website into Excel as the HTML formatting makes everything a mess.

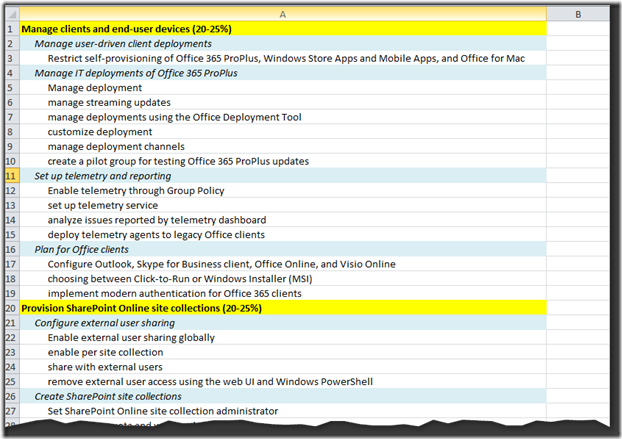

To solve this, I wrote a short PowerShell script that directly downloads the HTML for the specified exam, extracts out all of the exam content and then dumps it into Excel. I’ve found this very useful in my studying so I figured I’d share. To give context, running the script turns the above screenshot into this*:

* The colors aren’t included since it returns CSV data but it takes only a few seconds to color the entries as you prefer which is a huge time saver over trying to do so manually.

I’ve tried it on a few different exam pages and it seems to be robust enough to work with all the ones I’ve tried. If this is something you think, you might find useful, the PowerShell script is below:

# Connect to Microsoft's training website for the specified exam and extract the exam cirriculum into an Excel file

# Home; Exam; Microsoft

# We will save all of the line numbers and strings into this variable so we can later sort them in the order they appear on the webpage

$Results = @()

#region DOWNLOAD AND PREFORMAT HTML FILE

# Enter the URL for the Microsoft exam you wish to parse and convert to Excel

$ExamURL = 'https://www.microsoft.com/en-us/learning/exam-70-347.aspx'

# Connect to Microsoft's website and download the raw HTML for the exam we wish to parse

$Webpage = Invoke-WebRequest $ExamURL -Method Get -DisableKeepAlive

# The exam contents we are interested in start in the HTML with a <dl> tag that appears to be unique only to the content we want so we will extract just the contents between this tag

$Page = ($webpage.ParsedHtml.getelementsbytagname('dl')) | select -first 1 | select -ExpandProperty innerhtml

# Clean up the HTML to make it easier to work with

$Page = $Page -replace "`n","" # Remove carriage returns so text that currently splits over two lines is joined properly for later processing

$Page = $Page -replace '\s+', ' ' # Remove any extra spaces that contain more than one space

$Page = $Page -replace ">",">`r`n" # With the HTML cleaned up, insert a carriage return after each ending '>'HTML tag so to make the results easier to parse

$Page = $Page -split "`r`n" # Convert the text into a PowerShell object such that each line is its own row in the object

#endregion

#region LEVEL 1 - SECTION DIVIDERS

# The section dividers are contained in a string that contains the keyword Syllabus. We look this up and then save the line number, the entry contents and some positioning Information

($Page | select-string "#syllabus").LineNumber | % { $Results += [pscustomobject]@{Linenumber = $_; MSText = $Page[$_]; Position=0; Level=1 }}

# Modify the output to clean it up and remove unwanted characters

ForEach($Entry in $Results)

{

$OriginalLine = $Entry.MSText

$NewLine = $OriginalLine -replace "</a>","" # Included at the end of some lines so we'll remove it

$NewLine = $NewLine -replace "â","-" # This shows up for unknown reasons in the output so we need to remove it

$NewLine = $NewLine -replace [char]128, "" # For unknown reasons the character used for the '-' between the percetages in the section headers return garbage output so we need to filter out them out

$NewLine = $NewLine -replace [char]147, "" # For unknown reasons the character used for the '-' between the percetages in the section headers return garbage output so we need to filter out them out

$Entry.MSText = $NewLine

}

#endregion

#region LEVEL 2 - TOPIC DIVIDERS

# All of the topic dividers include <ul> so we will sue that to identify them

($Page | select-string "<ul>").LineNumber | % {

$OriginalLine = $Page[$_-1] # We want to grab the text one line before the <ul> as that contains the topic divider

$NewLine = $OriginalLine.trim()

$NewLine = " $NewLine" # We use fixed spaces isntead of tabs as Excel doesn't support tabs and we want the text to be indented in Excel when we export it

$Results += [pscustomobject]@{Linenumber = $_-1; MSText = $NewLine; Position=0; Level=2 }

}

ForEach($Entry in $Results)

{

$OriginalLine = $Entry.MSText

$NewLine = $OriginalLine -replace "<ul>","" # Modify the returned lines to remove the any standalone <ul> HTML tags

$Entry.MSText = $NewLine

}

#endregion

#region LEVEL 3 - ITEMS TO STUDY

# The individual items to study are found after the UL tag so we look grab the line after that

($Page | select-string "<ul>").LineNumber | % {

# Each item to study is semi-colon delimited so we break this apart so each item to study gets its own row

$OutputObject = $Page[$_+1] -split ";"

# We need to record the order in which the individual study items are recorded so when we display the final object, we can sort by this order, ensuring our order matches the original

$i=0

ForEach($Entry in $OutputObject)

{

$Entry = $Entry.trim()

$Entry = " $Entry"

$Results += [pscustomobject]@{Linenumber = $_+1; MSText = $Entry; Position = $i; Level=3 }; $i++

}

}

# Modify the returned lines to remove the any standalone </i> HTML tags and to remove the tabs

ForEach($Entry in $Results)

{

$OldLine = $Entry.MSText

$NewLine = $OldLine -replace "<ul>",""

$NewLine = $NewLine -replace "</li>",""

$Entry.MSText = $NewLine

}

#endregion

# Export the content, sorted by line number so the order matches that from the website and save the text entries to the clipboard so they can be pasted into Excel

$Final = $Results | sort linenumber, position | select mstext

$Final.mstext

write-host "Copied content to Clipboard. You can now paste into Excel for further processing" -ForegroundColor Green

$Final.mstext | clip