March 31st was World Backup Day (http://www.worldbackupday.com). This special date is intended to raise awareness of the importance of having backups of your data. In my case, having served as a Systems Administrator for many years now, I’ve repeatedly been saved by some kind of backup in my professional life, and so I have carried that lesson over into backing up the data of my personal life.

When you start thinking about and trying to implement backups in the home, however, one issue quickly becomes apparent. You can run all the backups in the world, but if you’re just writing to other places in your house, your data will still be lost in the event of a fire or burglary. That’s when you start thinking about off-site backups. This is a lot easier than it used to be thanks to the proliferation of cloud-based file and backup services. But any of these services requires, by definition, that you place your faith in the continued operation and security of a third party. For many people, this is a more than acceptable compromise considering the ease of use of these products. But what do you do if you are one of those people who would sleep better at night knowing you had complete control over the hardware on the other end?

The next problem that rears its head as you venture into the world of offsite backups is network bandwidth. The reality is, it almost doesn’t matter if a cloud provider is offering you 10TB of space on their servers if you only have a 10Mbit Internet connection, as it simply takes far, far too long to move that much data around at those speeds to be practical. But that’s fine because a large number of users, if they analyze the contents of their hard drives, will find that most of it can be replaced from other sources. Operating system files can be reinstalled from an ISO, Steam games can be redownloaded, and movies and music can be redownloaded from wherever you obtained them. Really, when you get down to it, you’ll likely find that your data requirements are much smaller than your used disk space would suggest. If you then do a risk assessment on what you don’t want to lose versus what you cannot lose, you’ll probably end up with a handful of documents, spreadsheets, and pictures that you consider truly irreplaceable.
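
To put rough numbers on that, here is a back-of-the-envelope sketch in PowerShell. The helper name `Get-TransferTime` is made up for illustration, the 50 MB figure is just an example of a small set of critical files, and the math assumes the link runs at full line rate with no protocol overhead, so real transfers will be slower.

```powershell
# Hypothetical helper: estimate how long a transfer takes at a given link speed.
# Assumes decimal units (1 MB = 1,000,000 bytes) and zero protocol overhead.
function Get-TransferTime {
    param(
        [double]$SizeBytes,       # amount of data to move
        [double]$LinkMbitPerSec   # link speed in megabits per second
    )
    $seconds = ($SizeBytes * 8) / ($LinkMbitPerSec * 1e6)
    [TimeSpan]::FromSeconds($seconds)
}

# 10 TB over a 10 Mbit connection: roughly three months of continuous transfer
(Get-TransferTime -SizeBytes 10e12 -LinkMbitPerSec 10).TotalDays      # about 92.6

# 50 MB over the same connection: well under a minute
(Get-TransferTime -SizeBytes 50e6 -LinkMbitPerSec 10).TotalSeconds    # 40
```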

Now that we’ve dropped the file size requirements down considerably, some new avenues for off-site backups begin to emerge and that is what brings us to the purpose of today’s HOWTO.

Chances are, we all know someone with an Internet connection. It could be a family member, a relative or a friend. Chances are also good that this person has a 4 port router for their Internet and that it has 1 or more ports free. What if we could, with an incredibly minimal footprint, leverage one of these ports for our offsite backups? That’s what I’m going to show you how to do right now.

It all started with a device I happened across on Amazon called the NAS2U Adapter from Addonics.

The device was intriguing in its simplicity. Plug an Ethernet cable into one end and a USB mass storage device into the other and suddenly you have an instant Samba/FTP-based NAS. I liked the idea enough that I bought one just to experiment with. They sell for around $40 US on the American Amazon store, but unfortunately the markup in Canada is significant, as this unit cost me $82.92 with shipping. Now that it has shown up, let’s have a look at it, shall we?

As you can see, the device is incredibly small, about half the width of a standard CD. So what can we do with this? As I mentioned earlier, it’s likely that you only have a handful of files that you absolutely cannot lose. In my case, those work out to be about 50MB in size. That brings us to the first complaint people have about this device, which for my situation at least is irrelevant. You see, the performance from this thing is, shall we say, pretty horrible. I’ve maxed out at 3mb/s reads and only 1mb/s writes. Sounds pretty crappy, right? But again, remember that in my case (and I suspect in others’ if they really prioritized their data), I’ve only got about 50MB to work with. More importantly though, the offsite destination I’ve opted to use is my parents’ house, and they only have a 10Mbit/512k connection. So the reality is that it doesn’t matter how fast the device can read or write, since my bottleneck is going to be the destination Internet connection itself. The other limitation people have complained about is that the USB device must be formatted FAT32. Again, however, since I don’t need to store any files over 2GB, that does not impact me.

This brings up the next point. What do you use for storage? Well since we have identified very small storage requirements, really any USB key will work. In my case, I have a literal stack of USB keys provided as gifts from various vendors over the years. This ends up happily solving another problem of how to handle storage failures. I have experimented with this device where I’ve copied a bunch of data to it, pulled out the key and stuck it directly into my computer. I can read the data just fine. I then pull out the key and stick in a different key and effectively instantly it shows up as expected. This means that it’s trivial to swap keys which is handy as it means I can provide a stack of USB keys to my parents and if I detect a failure I can simply call them and ask them to swap the keys. The question now is, how do I detect a failure? We’ll address that shortly.

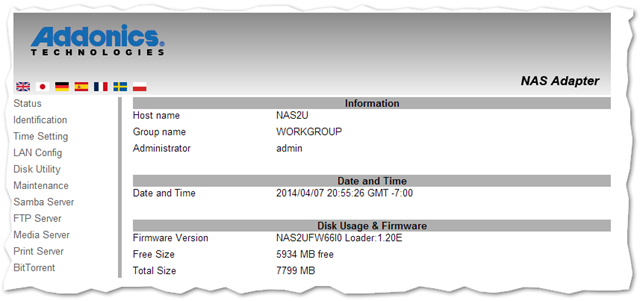

Now let’s have a look at how the device itself functions. By default, it will grab a DHCP address and then provide both Samba and FTP through anonymous read/write access. From there you can reach it over UNC at \\DHCP_assigned_IP_address, over FTP at DHCP_assigned_IP_address, or through its web management interface at the same IP. Let’s have a look at that management interface now, shall we?

As you can see below, there isn’t a lot to this thing but in my eyes at least, I see that as a plus. Fitness for use and all that.

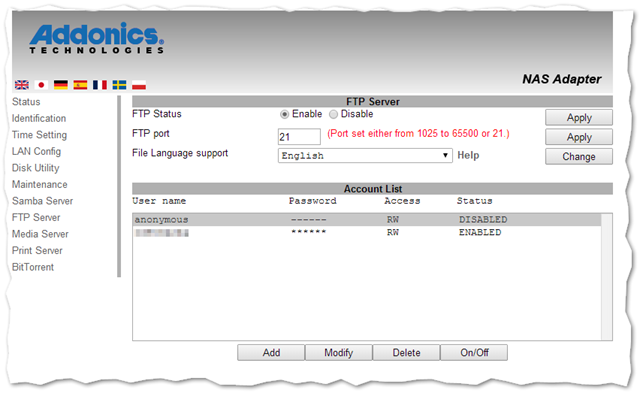

In our case though, we want to disable anonymous authentication and add a new user. Remember that this account will be exposed to the public Internet. But don’t worry, we’re not going to rely on FTP as our security mechanism, as you’ll see in a moment.

So we now have our offsite storage configured, but what do we back up, how do we back it up and how do we protect the data from unauthorized access? The specifics will have to be addressed in a separate blog post, but for now I can tell you the high-level approach I use to great success:

- Use Macrium Reflect (www.macrium.com) to perform an image level backup of a single critical folder. This has the advantage of fully supporting Volume Shadow Copy (VSS) which means the backups can succeed even if the files are in use

- Use the built in AES encryption of Macrium to protect the resulting backup files

- Take a second backup of the really, really critical files using 7zip, also applying encryption and a password to that file. (The thinking here is that if Macrium goes out of business and my copies of the installer don’t work anymore for some reason, I can still recover the really important data. Overkill? Probably. But again, the files are tiny and relatively easily managed, so why not?)

- Run a PowerShell script as a Windows scheduled task that identifies whether the file is a daily, weekly or monthly backup and renames the files accordingly. The script then sends the encrypted files via FTP to the NAS2U device at the remote site
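
As a rough illustration of the renaming step in the last bullet, one way to decide whether a backup counts as daily, weekly or monthly is to look at its date. The function names and the rules used here (first of the month is monthly, Sunday is weekly, everything else is daily) are assumptions made for this sketch.

```powershell
# Hypothetical helper: classify a backup by its date.
# Assumed rules: 1st of the month = Monthly, Sunday = Weekly, otherwise Daily.
function Get-BackupType {
    param([datetime]$Date)
    if ($Date.Day -eq 1) { return 'Monthly' }
    if ($Date.DayOfWeek -eq [System.DayOfWeek]::Sunday) { return 'Weekly' }
    return 'Daily'
}

# Hypothetical helper: prefix the file name with the backup type,
# e.g. backup.7z becomes Weekly-backup.7z
function Get-BackupName {
    param([string]$FileName, [datetime]$Date)
    '{0}-{1}' -f (Get-BackupType -Date $Date), $FileName
}

Get-BackupName -FileName 'backup.7z' -Date ([datetime]'2014-03-30')   # Weekly-backup.7z (a Sunday)
```

Because the name carries only the type and not the date, each new daily backup would overwrite the previous daily one on the FTP server, which keeps the space used on a small USB key predictable. That is a design choice of this sketch, not a requirement.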

Put another way, let’s summarize what you’d need to do to get this working for yourself:

- Purchase the Addonics NAS2U from Amazon

- Purchase or obtain two or more 4GB+ USB Keys

- Bring the NAS2U and USB keys to your offsite location (e.g. your parents’ house)

- Configure their router to provide a DHCP reservation (or static if you prefer) to the NAS2U

- Configure their router to port forward FTP traffic to that IP address

- Connect a USB key to the device. If it wasn’t formatted FAT32 already, use the format utility built into the WebUI

- Configure their router to use a Dynamic DNS service (e.g. dyndns) so that you have a consistent name to use to connect to this device. For demonstration purposes, let’s call it nas2u.dyndns.org

- Connect to the web interface of the NAS2U, disable anonymous FTP access and create a new FTP account

- Go home as your DR site is now configured (wasn’t that easy?)

- From your home computer where the source data lives, open up an FTP client and verify you can connect to nas2u.dyndns.org

- It works? Fantastic!

- On your source PC, place all of your critical data into the same folder (say C:\Data) being careful to ensure that it’s only critical data as these files will be sent over the wire for each backup

- Create a PowerShell script that runs as a Windows scheduled task and, on each execution, compresses the contents of C:\Data and sends it to the FTP site
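
The last step could be sketched roughly as follows. This is an untested outline rather than a finished script: the function name `Send-DataBackup`, the 7-Zip install path, the C:\Temp working folder, the account name and both passwords are placeholders to replace with your own values.

```powershell
# Sketch only: compress a folder with 7-Zip (encrypted) and upload the archive over FTP.
# Every default value below is a placeholder.
function Send-DataBackup {
    param(
        [string]$SourceDir       = 'C:\Data',
        [string]$Archive         = 'C:\Temp\backup.7z',
        [string]$FTPTarget       = 'ftp://nas2u.dyndns.org/backups/backup.7z',
        [string]$FTPUsername     = 'backupuser',
        [string]$FTPPassword     = 'password1',
        [string]$ArchivePassword = 'password2',
        [string]$SevenZip        = 'C:\Program Files\7-Zip\7z.exe'
    )

    # Start from a clean archive so stale files are not carried over
    if (Test-Path $Archive) { Remove-Item $Archive }

    # "a" adds files to an archive, -p sets the password, -mhe=on also encrypts file names
    & $SevenZip a "-p$ArchivePassword" '-mhe=on' $Archive "$SourceDir\*" | Out-Null
    if ($LASTEXITCODE -ne 0) { throw "7-Zip failed with exit code $LASTEXITCODE" }

    # WebClient.UploadFile stores the local file at the given FTP URI
    $webclient = New-Object System.Net.WebClient
    $webclient.Credentials = New-Object System.Net.NetworkCredential($FTPUsername, $FTPPassword)
    try     { [void]$webclient.UploadFile((New-Object System.Uri($FTPTarget)), $Archive) }
    finally { $webclient.Dispose() }
}
```

The script run by the scheduled task would then end with a call such as `Send-DataBackup -FTPPassword $yourPassword -ArchivePassword $yourOtherPassword`, where both variables are yours to supply.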

The last problem we need to solve is how we know whether our backups are valid. As the saying goes, "no one cares about backups. Everyone cares about restores." Wouldn’t it be great if we could automate the process of validating these files and perhaps even get an email telling us the status so we can have that warm and fuzzy feeling? You’re in luck.

Below is a modified version of the script that I use to automatically validate my backups. It’s important to note that I’ve ripped a bunch of stuff out of the code, so the script below is not tested in any way; use it at your own risk. But if nothing else, I suspect it’ll be really useful from a learning perspective. For example, I never found a really simple way to FTP multiple files through PowerShell, so I ended up coming up with the solution below largely by piecing together lots of different blog posts.

The script demonstrates a few different things:

- How to use encryption to protect passwords inside a script

- Use 7zip to automatically test an archive

- Generate HTML color coded log file and email the results

```powershell
# Downloads backups from the FTP site and verifies them with 7-Zip
# (the compress and upload steps have been removed from this copy)
# 7Zip; Email; FTP; Verify;

#Region Variables that control the function of the program
$FTPServer      = "ftp://nas2u.dyndns.org/backups/"
$FTPUsername    = "backupuser"
$FTPCred        = "password1"
$FilesToGet     = @("backup.7z", "otherfile.zip")
$DownloadFolder = "C:\Temp"
$7ZipCred       = "password2"
$7ZipCmd        = "C:\Program Files\7-Zip\7z.exe" # Run with the call operator (&) below, so the space in the path is fine
$OutputLogFile  = "c:\Data\verifylog.html"
$DateNow        = Get-Date
$objLogData     = @()
#EndRegion

#Region Code used to work with encrypted passwords -- not used in this script but included as a reference
# Run this one time to create the encrypted password file:
# Read-Host -Prompt "7Zip Password" -AsSecureString | ConvertFrom-SecureString | Out-File c:\Creds\7zipcred.txt
# $7ZipCredFile = "c:\Creds\7zipcred.txt"
# $7ZipCred = (New-Object System.Management.Automation.PSCredential -ArgumentList "Fake", (Get-Content $7ZipCredFile | ConvertTo-SecureString)).GetNetworkCredential().Password
#EndRegion

#Region Function to Create a Custom Log File
Function AddLogEntry($Message, $Valid, $Date, $Path)
{
    $Message = $Message.Trim() # Remove any additional white space
    # Script scope is used instead of Global. Global works for me but not when run as a scheduled task
    $Script:objLogData += New-Object PSObject -Property @{LogMessage = $Message; BackupValid = $Valid; LogDate = $Date; FilePath = $Path}
}
#EndRegion

#Region Function to Send Myself a Status Email
Function SendStatusEmail($LogContents)
{
    $smtpServer = "smtp.server.net"
    $msg  = New-Object Net.Mail.MailMessage
    $smtp = New-Object Net.Mail.SmtpClient($smtpServer)
    $msg.From = "source@domain.com"
    $msg.To.Add("destination@domain.com")
    $msg.IsBodyHTML = $true
    $msg.Subject = "Backup Report"
    $msg.Body = $LogContents
    $smtp.Send($msg)
}
#EndRegion

#Region Grab files from FTP
$webclient = New-Object System.Net.WebClient
$webclient.Credentials = New-Object System.Net.NetworkCredential($FTPUsername, $FTPCred)
ForEach($File in $FilesToGet)
{
    $uri = New-Object System.Uri("$FTPServer$File")
    try { $webclient.DownloadFile($uri, "$DownloadFolder\$File") }
    catch { AddLogEntry "$_" $false $DateNow "$FTPServer$File" }
}
# Collect the full paths of the downloaded archives; @() guarantees an array even for 0 or 1 files
$7ZFiles = @(Get-ChildItem "$DownloadFolder\*.7z" | Select-Object -ExpandProperty FullName)
if($7ZFiles.Count -eq 0) { AddLogEntry "No 7Zip file found. Nothing to Scan." $false $DateNow }
#EndRegion

#Region Verify 7zip file and update log file
ForEach($7Zfile in $7ZFiles)
{
    # "t" tests the archive; 7-Zip returns exit code 0 when no errors were found
    & $7ZipCmd t "-p$7ZipCred" $7Zfile | Out-Null
    if($LASTEXITCODE -eq 0) { AddLogEntry "Verify Success" $true $DateNow $7Zfile }
    else { AddLogEntry "Unable to open .7Z File" $false $DateNow $7Zfile }
}
#EndRegion

#Region Export Log Data to Color coded HTML
# Keep the old log so the newest results can be written at the top of the file
if(Test-Path $OutputLogFile)
{
    $OriginalLogFile = Get-Content $OutputLogFile
    Remove-Item $OutputLogFile
}
ForEach($Entry in $objLogData)
{
    if($Entry.BackupValid -eq $true)
    {
        $FontColor = "Green"
        # The backup verified, so the downloaded copy is no longer needed
        if(Test-Path $Entry.FilePath) { Remove-Item $Entry.FilePath }
    }
    else { $FontColor = "Red" }
    $OutMessage = "<FONT COLOR=$FontColor>" + [string]$Entry.LogDate + " " + $Entry.LogMessage + " " + $Entry.FilePath + "<BR></FONT>"
    Add-Content $OutputLogFile $OutMessage
}
Add-Content $OutputLogFile "<BR>"
if($OriginalLogFile) { Add-Content $OutputLogFile $OriginalLogFile }
#EndRegion

#Region Email Log to myself
# Uncomment once the SMTP settings in SendStatusEmail are filled in
#SendStatusEmail (Get-Content $OutputLogFile | Out-String)
#EndRegion
```
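
The encrypted-password lines that are commented out in the script deserve a closer look. Below is a minimal round trip of that technique, assuming Windows and a made-up file location in the temp folder. One thing worth knowing: when `ConvertFrom-SecureString` is used without a key, it relies on the Windows Data Protection API, so the resulting file can only be decrypted by the same user account on the same machine. In practice that means the file should be created while logged in as the account the scheduled task runs under.

```powershell
# Round trip of the encrypted-password technique referenced in the script.
# The file path is a placeholder chosen for this sketch.
$CredFile = Join-Path ([System.IO.Path]::GetTempPath()) '7zipcred.txt'

# One-time step. In real use you would prompt with Read-Host -AsSecureString;
# a literal is used here only to keep the sketch self-contained.
$secure = ConvertTo-SecureString 'password2' -AsPlainText -Force
$secure | ConvertFrom-SecureString | Out-File $CredFile

# In the scheduled script: read the file back and recover the plain text for 7-Zip.
# The user name "Fake" is never used; PSCredential is just a convenient way to unwrap the SecureString.
$restored = Get-Content $CredFile | ConvertTo-SecureString
$plain = (New-Object System.Management.Automation.PSCredential('Fake', $restored)).GetNetworkCredential().Password
```

The password still ends up in memory as plain text when it is handed to 7-Zip or the FTP client, so this protects the script file on disk rather than the running process.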